Over the past year, members of the People Like You team have been collaborating with Martín Tironi, Matías Valderrama, Dennis Parra Santander, and Andre Simon from the Pontificia Universidad Católica de Chile in Santiago, Chile, on a project called “Algorithmic Identities.” Between the 13th and 20th of January, Celia Lury, Sophie Day, and Scott Wark visited Santiago to participate in a day-long workshop reviewing the collaboration to date and discussing where it might go next.

The Algorithmic Identities project was devised to study how people understand, negotiate, shape, and in turn are shaped by algorithmic recommendation systems. Its premise is that whilst there’s lots of excellent research on these systems, little attention has been paid to how they’re used: how people understand them, how people feel about them, and how people become habituated to them as they interact with online services.

But we’re also interested in how algorithmic recommendation systems might be rendered legible to research. The major online services and social media platforms that people use are typically proprietary. Their algorithms are closely guarded: we can study their effects on users, but not the algorithms themselves. In media-theoretical argot, they’re “black boxed”.

To study these systems, we adopted a critical making approach to doing research: we made an app. This app, ‘Big Sister’, emulates a recommendation system. It takes text-based user data from one of three sources—Facebook or Twitter, through these services’ Application Programming Interfaces, or a user-inputted text—and runs this data through an IBM service called Watson Personality Insights. This service generates a “profile” of the user based on the ‘big five personality traits’, which are widely used in the business and marketing world. Finally, the user can then connect Big Sister to their Spotify account to generate music recommendations based on this profile.

Our visit to Santiago happened after the initial phase of this project. Through an open call, we invited participants in Chile and the United Kingdom to use Big Sister and to be interviewed about their experience. We used an ethnographic method known as “trace interviews”, in which Big Sister acts as a frame and prompt for exploring participants’ experiences of the app and their relationship to algorithmic recommendation systems in general. With this method we conducted a first, trial set of interviews (four in Santiago and five in London), which formed the basis of the workshop.

This workshop had a formal component: an introduction and outline of the project by Martín Tironi; presentations by Celia Lury and Sophie Day; and an overview of the initial findings by Matías Valderrama and Scott Wark. But it also had a discursive element: Tironi and Valderrama invited a range of participants from academic and non-governmental institutions to discuss the project, its theoretical underpinnings, its findings and its potential applications.

Tironi’s presentation outlined the concepts that informed the project’s design. Its comparative nature—the fact that it’s situated in Santiago and in the institutional locations of the People Like You project, London and Coventry—allows us to compare how people navigate recommendation in distinct cultural contexts. More crucially, it implements a mode of research that proceeds through design, or via the production of an app. Through collaborations between social science and humanities scholars and computer scientists—most notably the project’s programmer, Andre Simon—it positions us, the researchers, within the process of producing an app rather than in the position of external observers of a product.

This position can feel uncomfortable. The topic of data collection is fraught; by actively designing an app that emulates an algorithmic recommendation system, we no longer occupy an external position as critics. But it’s also productive. Our app isn’t designed to provide a technological ‘solution’ to a particular problem. It’s designed to produce knowledge about algorithmic recommendation systems, for us and our participants. Because our app is a prototype, this knowledge is contingent and imprecise—and flirts with the potential that the app might fail. It also introduces the possibility of producing different kinds of knowledge.

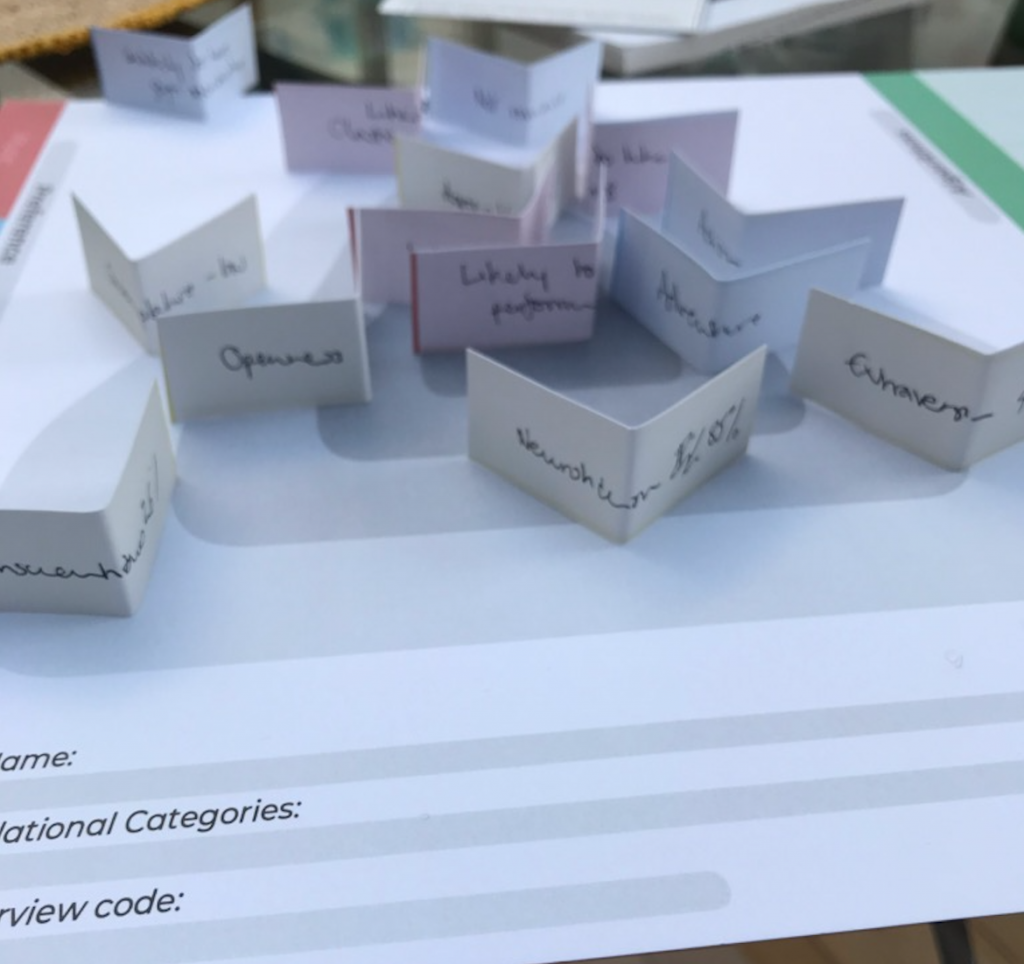

My presentation with Valderrama outlined some preliminary interview findings and emerging themes. Our participants are aware of the role that recommendation systems play in their lives. They know that these systems collect data as the price for the services they receive in return. That is, they have a general ‘data literacy,’ but tend to be ambivalent about data collection. Yet some participants found the profiling component of our app confronting, even ‘shocking’. One participant in the UK did not expect their personality profile to characterise them as ‘introverted’. Another in Santiago wondered how closely their high degree of ‘neuroticism’ correlated with the ongoing social crisis in Chile, marked by large-scale protests about inequality and the country’s constitution.

Using the ‘traces’ of their engagement with the app, these interviews opened up fascinating discussions about participants’ everyday relationship with their data. Participants in both places likened recommendations to older prediction techniques, like horoscopes. They expected their song recommendations to be inappropriate or even wrong, but using the app allowed them to reflect on their data. We began to get the sense that habit was a key emergent theme.

We become habituated to data practices, which are designed to shape our actions to capture our data. But we also live with, even within, the algorithmic recommendation systems that inform our everyday lives. We inhabit them. We began to understand that our participants aren’t passive recipients of recommendations. Through use, they develop a sense of how these systems work, learning to shape the data they provide to shape the recommendations they receive. Habit and inhabitation intertwine in ambivalent, interlinked acts of receiving and prompting recommendation.

Lury’s and Day’s presentations took these reflections further, presenting some emergent theoretical speculations on the project. Day drew a parallel between the network-scientific techniques that underpin recommendation and anthropological research into kinship. Personalised recommendations work, counter-intuitively, by establishing likenesses between different users: a recommendation will be generated by determining what other people who like the same things as you also like. This principle is known as ‘homophily.’ Day highlighted the anthropological precursors to this concept, noting how this discipline’s deep study of kinship provides insights into how algorithmic recommendation systems group us together. In studies of kinship, ‘heterophily’ (liking what is different) plays a key role in explaining particular groupings. This feature is also mobilised in studies of infectious diseases, for example in what are called assortative and disassortative mixing patterns, but it has been less explicitly discussed in commentaries on algorithmic recommendation systems. Her presentation outlined a key line of enquiry that anthropological thinking can bring to our project.
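To make the homophily principle concrete, here is a minimal sketch of the “people who like what you like also like…” logic, written as a toy user-based recommender. It is an illustration only, not the method used by Big Sister, Spotify, or any other service: the user names, artist names, the `jaccard` and `recommend` functions, and the choice of Jaccard overlap as the likeness measure are all assumptions made for the example.

```python
from collections import Counter

# Hypothetical data: each (invented) user maps to the set of artists they like.
likes = {
    "ana": {"artist_a", "artist_b", "artist_c"},
    "ben": {"artist_a", "artist_b", "artist_d"},
    "carla": {"artist_b", "artist_c", "artist_d", "artist_e"},
    "dev": {"artist_f"},
}


def jaccard(a, b):
    """Likeness of two users: shared likes divided by all likes (0 to 1)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(user, likes, top_n=3):
    """Suggest items liked by users whose tastes overlap with `user`.

    Each candidate item is scored by summing the likeness of every
    other user who likes it, so similar users count for more.
    """
    own = likes[user]
    scores = Counter()
    for other, theirs in likes.items():
        if other == user:
            continue
        similarity = jaccard(own, theirs)
        if similarity == 0:
            continue  # no shared likes: this user contributes nothing
        for item in theirs - own:  # only items the user has not yet liked
            scores[item] += similarity
    return [item for item, _ in scores.most_common(top_n)]


print(recommend("ana", likes))
```

In this toy data, “ana” is only ever recommended things liked by users who already resemble her, while “dev”, who shares nothing with anyone, receives no recommendations at all. A heterophily-oriented variant would instead have to score items by difference rather than likeness, which this sketch does not attempt.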

Lury’s presentation wove reflections on habit together with an analysis of genre. Lury asked if recommendation systems are modifying how genre operates in culture. Genres classify cultural products so that they can be more easily found and consumed. They can be large and inclusive categories, like ‘rap’ or ‘pop’; they can also be very precise: ‘vapourwave,’ for instance. When platforms like Spotify use automated processes, like machine learning, to finesse large, catch-all genres and to produce hundreds or thousands of micro-genres that emerge as we ‘like’ cultural products, do we need to change what we mean by ‘genre’? Moreover, how does this shape how we inhabit recommendation systems? Lury’s presentation outlined another key line of enquiry that we’ll pursue as our research continues.

For me, our visit to Santiago confirmed that the ‘Algorithmic Identities’ project is producing novel insights into users’ relationship to algorithmic recommendation systems. These systems are often construed as opaque and inaccessible. But though we might not have access to the algorithms themselves, we can understand how users shape them as they’re shaped by them. The ‘personalised’ content they provide emerges through habitual use—and can, in turn, provide a cultural place of inhabitation for their users.

We’ll continue to explore these themes as the project unfolds. We’re also planning a follow-up workshop, tentatively entitled ‘Recommendation Cultures,’ in London in early 2020. Our work and this workshop will, we hope, reveal more about how we inhabit recommendation cultures by exploring the relations between personalised services and the people who use them. Rather than treating services and users as simply existing in parallel with each other, we want to think about how they emerge together in parallax.